The convergence of artificial intelligence and human cognition has reached a pivotal moment. Synthetic attention modeling, the family of techniques that let a model decide which parts of its input matter most for the task at hand, has emerged as one of the most influential approaches to how machines process information.

As we navigate an increasingly complex digital landscape, understanding how attention mechanisms work in AI systems becomes crucial for developers, researchers, and businesses alike. This technology promises to revolutionize everything from natural language processing to computer vision, creating more intuitive and responsive systems that better understand human needs and intentions.

🧠 Understanding the Foundations of Synthetic Attention Modeling

Synthetic attention modeling is a computational approach loosely inspired by the human brain's ability to focus selectively on relevant information while filtering out noise. Unlike traditional processing methods that treat all input data equally, attention mechanisms let AI systems dynamically assign more weight to the most pertinent elements of a given task.

The biological analogy draws on neuroscience research showing that human attention combines bottom-up sensory processing with top-down cognitive control. When you read this sentence, your brain does not process every letter with equal intensity: it identifies patterns, predicts upcoming words, and focuses on semantically important content.

Modern attention mechanisms in machine learning assign a weight to each input component. These weights are typically produced by a softmax, so they are non-negative and sum to one. They determine how much each piece of information contributes to the output, which lets neural networks model contextual relationships and dependencies across long input sequences.
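The following minimal Python/NumPy sketch makes the weighting concrete. It assumes each input element already has a relevance score. The scores and vectors are made-up illustrative values, not taken from a trained model. A softmax turns the scores into weights that are non-negative and sum to one, and the output is the weighted average of the inputs.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax: subtract the max before exponentiating."""
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

# Four input elements, each a 3-dimensional feature vector (illustrative values).
inputs = np.array([
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [1.0, 1.0, 1.0],
])

# Hypothetical relevance scores; in a real model these come from learned projections.
scores = np.array([2.0, 0.5, 0.1, 1.0])

weights = softmax(scores)   # non-negative, sums to 1
context = weights @ inputs  # weighted average of the input vectors

print(np.round(weights, 3))
print(np.round(context, 3))
```

The highest-scoring element dominates the result, but every element still contributes a little. This soft selection is differentiable, which is what allows the scoring to be trained by gradient descent.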

The Evolution from Simple Attention to Transformer Architecture

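The journey of attention mechanisms in artificial intelligence began with relatively simple additions to recurrent neural networks, most notably in encoder-decoder models for machine translation. Recurrent models struggled with long-range dependencies and often lost crucial information from early in a sequence by the time they processed later elements. Attention eased this by letting the decoder look back at every encoder state directly, instead of relying on a single fixed-length summary.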

The introduction of the Transformer architecture in 2017 marked a revolutionary breakthrough. By implementing self-attention mechanisms, Transformers enabled models to simultaneously consider all positions in a sequence, calculating attention scores between every pair of elements. This parallel processing capability dramatically improved both training efficiency and model performance.

Self-attention operates through three key components: queries, keys, and values. Each input element generates these three vectors, which interact mathematically to determine attention distributions. The query represents what information an element seeks, the key indicates what information it offers, and the value contains the actual content to be aggregated based on attention scores.
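The query/key/value interaction can be sketched as scaled dot-product self-attention, the formulation used in the original Transformer. In this minimal NumPy version, the projection matrices `W_q`, `W_k`, and `W_v` are random stand-ins for learned parameters. The sizes are arbitrary.

```python
import numpy as np

def softmax(x, axis=-1):
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention over a sequence X of shape (n, d_model)."""
    Q = X @ W_q                          # what each position is looking for
    K = X @ W_k                          # what each position offers
    V = X @ W_v                          # the content that gets mixed
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)      # (n, n): one score per pair of positions
    weights = softmax(scores, axis=-1)   # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
n, d_model, d_k = 5, 8, 4
X = rng.normal(size=(n, d_model))
# Random stand-ins for learned projection matrices.
W_q = rng.normal(size=(d_model, d_k))
W_k = rng.normal(size=(d_model, d_k))
W_v = rng.normal(size=(d_model, d_k))

output, weights = self_attention(X, W_q, W_k, W_v)
print(output.shape, weights.shape)  # (5, 4) (5, 5)
```

The `(n, n)` weight matrix is the "every pair of elements" computation described above. Because it is built with matrix products rather than a step-by-step loop, all positions are processed in parallel. Dividing by `sqrt(d_k)` keeps the dot products from growing with the key dimension. Without it, larger key dimensions would push the softmax toward near one-hot outputs.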

Multi-Head Attention: Expanding Perceptual Capacity 🎯

Multi-head attention mechanisms represent a significant advancement, allowing models to attend to information from different representation subspaces simultaneously. Rather than computing a single attention distribution, the model performs multiple attention operations in parallel, each focusing on different aspects of the relationships between input elements.

This architectural choice mirrors human cognitive capabilities, where we simultaneously process multiple features of stimuli—such as color, shape, motion, and semantic meaning. Each attention head can specialize in capturing specific types of patterns or relationships, from syntactic structures in language to spatial relationships in visual data.
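Here is a minimal multi-head sketch, again in NumPy with random matrices standing in for learned weights. The model dimension is split across `num_heads` heads, and each head runs its own scaled dot-product attention on a slice of the projected queries, keys, and values. The head outputs are then concatenated and mixed by an output projection `W_o`. The sizes are illustrative.

```python
import numpy as np

def softmax(x, axis=-1):
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

def multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads):
    n, d_model = X.shape
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    def split_heads(M):
        # (n, d_model) -> (num_heads, n, d_head)
        return M.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)     # (num_heads, n, n)
    weights = softmax(scores, axis=-1)                      # one attention map per head
    heads = weights @ V                                     # (num_heads, n, d_head)
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)   # back to (n, d_model)
    return concat @ W_o, weights

rng = np.random.default_rng(1)
n, d_model, num_heads = 6, 12, 3
X = rng.normal(size=(n, d_model))
# Random stand-ins for the learned Q, K, V, and output projections.
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

out, weights = multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads)
print(out.shape, weights.shape)  # (6, 12) (3, 6, 6)
```

Each head works on its own slice of the projections, so the three `(6, 6)` attention maps can differ, which is what allows heads to specialize. The total cost stays close to single-head attention at full width, because each head operates in a smaller `d_head` space.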

Applications Transforming Real-World Interactions

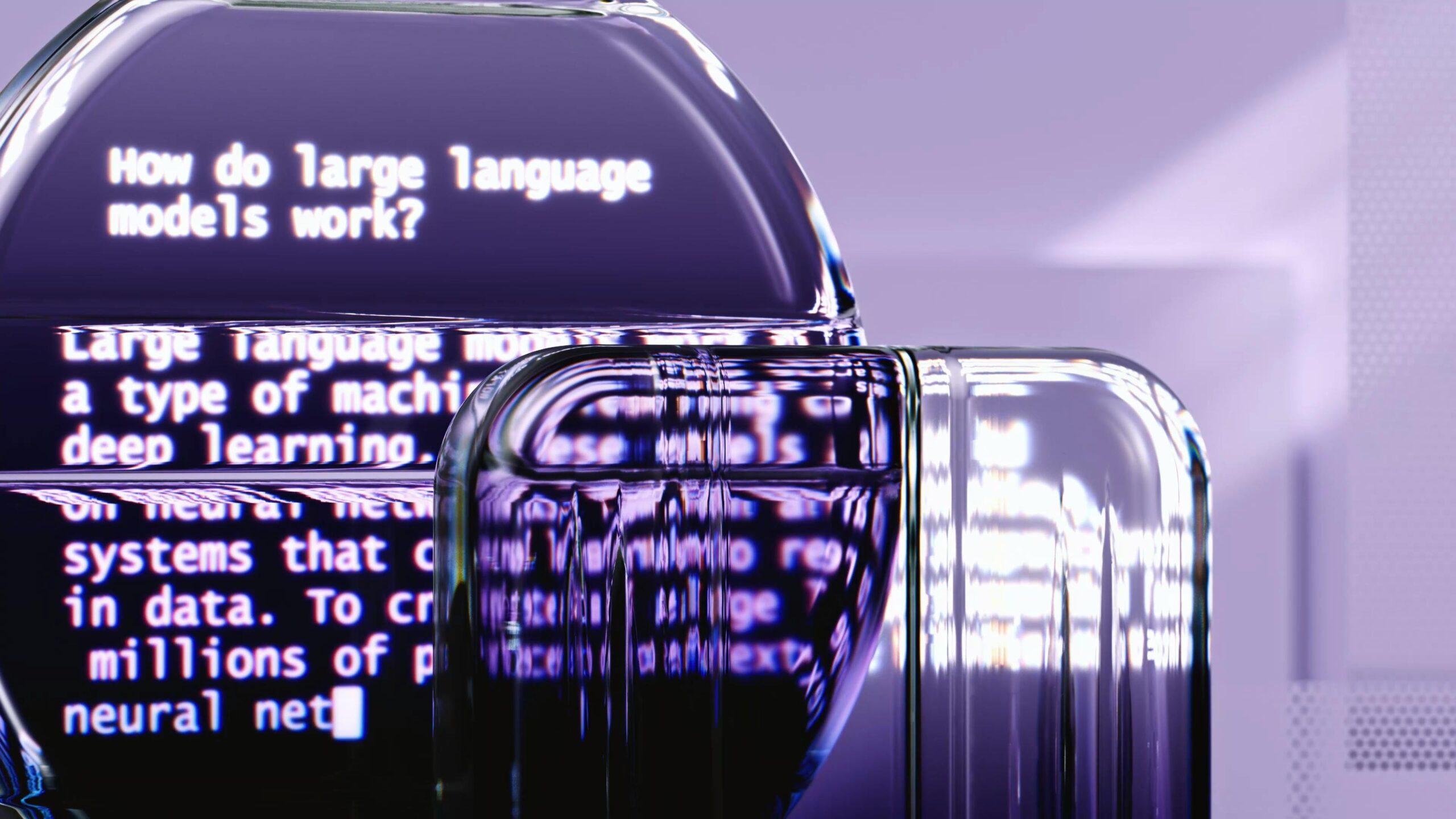

Natural language processing has been perhaps the most dramatically impacted domain. Transformer-based language models such as GPT and BERT rely on attention to capture context, nuance, and semantic relationships far better than earlier approaches. Generative models in the GPT family can sustain coherent conversations, translate between languages with high accuracy, and produce fluent text across diverse domains. Encoder models such as BERT excel at understanding tasks like classification and search.

In computer vision, attention models enable systems to focus on relevant regions of images dynamically. Object detection systems use spatial attention to identify and localize multiple objects simultaneously, while image captioning models coordinate visual and linguistic attention to generate accurate descriptions of complex scenes.

Healthcare Diagnostics Enhanced by Attention Mechanisms 🏥

Medical imaging analysis can benefit substantially from synthetic attention modeling. Diagnostic systems can learn to focus on subtle anomalies in X-rays, MRIs, and CT scans that might escape human notice. They can also produce attention maps that show clinicians which regions the model weighted most heavily. These maps are a useful, though not definitive, guide to what influenced the model's conclusions.

Predictive healthcare applications use temporal attention mechanisms to identify patterns in patient records, electronic health data, and continuous monitoring streams. These systems can flag early warning signs of deteriorating conditions by focusing on relevant historical indicators and current measurements.

The Mechanics of Training Attention-Based Models

Training robust attention-based models requires substantial computational resources and carefully curated datasets. Strictly speaking, the attention weights are not themselves learned parameters. They are recomputed for every input from the learned query, key, and value projection matrices. It is these projections that backpropagation adjusts as the model optimizes its objective across thousands or millions of training examples.
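It helps to keep two things apart. The first is the trainable parameters, here the projection matrices `W_q` and `W_k`, which are random stand-ins for values that backpropagation would adjust. The second is the attention weights, which are recomputed from those parameters for every new input. A minimal NumPy sketch with illustrative sizes:

```python
import numpy as np

def softmax(x, axis=-1):
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

def attention_weights(X, W_q, W_k):
    """Attention weights are a function of the input X and the learned projections."""
    Q, K = X @ W_q, X @ W_k
    return softmax(Q @ K.T / np.sqrt(K.shape[-1]), axis=-1)

rng = np.random.default_rng(2)
d_model, d_k = 8, 4
# These matrices are the trainable parameters (random stand-ins here).
W_q = rng.normal(size=(d_model, d_k))
W_k = rng.normal(size=(d_model, d_k))
n_params = W_q.size + W_k.size

# Two different inputs, even of different lengths, reuse the same parameters...
weights_a = attention_weights(rng.normal(size=(5, d_model)), W_q, W_k)
weights_b = attention_weights(rng.normal(size=(9, d_model)), W_q, W_k)

# ...but produce different attention maps, sized by each input's length.
print(n_params, weights_a.shape, weights_b.shape)  # 64 (5, 5) (9, 9)
```

The parameter count stays fixed at 64 regardless of sequence length, while the attention map grows with the input. Training changes the projections, and the projections in turn determine how each new input is weighted.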

Positional encoding addresses a distinctive challenge for attention-based architectures. Pure self-attention is permutation-equivariant: shuffling the input elements simply shuffles the outputs in the same way, so the mechanism has no inherent notion of sequential order. Positional encodings inject information about element positions through sinusoidal functions or learned embeddings. This lets models exploit sequential structure when it matters.
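The sinusoidal variant can be written in a few lines. This NumPy sketch follows the formula from the original Transformer paper: PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The function name and sizes are illustrative, the token embeddings are random stand-ins, and the sketch assumes an even model dimension.

```python
import numpy as np

def sinusoidal_positional_encoding(num_positions, d_model):
    """Sinusoidal encodings as in the original Transformer (d_model assumed even)."""
    assert d_model % 2 == 0, "this sketch assumes an even d_model"
    positions = np.arange(num_positions)[:, np.newaxis]       # (num_positions, 1)
    dims = np.arange(0, d_model, 2)[np.newaxis, :]             # even indices 2i
    angles = positions / np.power(10000.0, dims / d_model)     # (num_positions, d_model / 2)
    pe = np.zeros((num_positions, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions
    pe[:, 1::2] = np.cos(angles)  # odd dimensions
    return pe

pe = sinusoidal_positional_encoding(num_positions=50, d_model=16)
# Token embeddings (random stand-ins) are summed with the encodings before attention.
embeddings = np.random.default_rng(3).normal(size=(50, 16))
inputs_with_position = embeddings + pe
print(pe.shape)  # (50, 16)
```

Each position gets a distinct pattern of sines and cosines at different frequencies. Because the encodings are added to the token embeddings, identical tokens at different positions now produce different queries and keys.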

Computational complexity represents another consideration, as standard self-attention scales quadratically with sequence length. For very long sequences, this becomes prohibitively expensive. Researchers have developed numerous efficient attention variants, including sparse attention patterns, linearized attention approximations, and hierarchical attention schemes that process information at multiple scales.
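As one example of the linearized family, the sketch below follows the kernel formulation of Katharopoulos et al. (2020, "Transformers are RNNs"). It replaces the softmax with a positive feature map φ(x) = elu(x) + 1. Note that this is a different similarity function rather than an approximation of softmax attention. The helper names and sizes are illustrative. The quadratic reference computes the same kernelized attention the slow way, to show that both give identical results.

```python
import numpy as np

def elu_plus_one(x):
    """Positive feature map phi(x) = elu(x) + 1."""
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))

def linear_attention(Q, K, V):
    """Kernelized attention whose cost grows linearly with sequence length n."""
    Qf, Kf = elu_plus_one(Q), elu_plus_one(K)   # (n, d)
    KV = Kf.T @ V                               # (d, d_v): summed once over all positions
    normalizer = Qf @ Kf.sum(axis=0)            # (n,)
    return (Qf @ KV) / normalizer[:, np.newaxis]

def quadratic_reference(Q, K, V):
    """Same kernel, computed via an explicit (n, n) matrix."""
    Qf, Kf = elu_plus_one(Q), elu_plus_one(K)
    A = Qf @ Kf.T                               # (n, n) similarity matrix
    A = A / A.sum(axis=1, keepdims=True)
    return A @ V

rng = np.random.default_rng(4)
n, d = 1000, 16
Q, K, V = (rng.normal(size=(n, d)) for _ in range(3))
fast = linear_attention(Q, K, V)
slow = quadratic_reference(Q, K, V)
print(np.allclose(fast, slow))  # True
```

Both functions return the same result, but `linear_attention` never materializes the `(n, n)` matrix. By computing `Kf.T @ V` first, its cost is proportional to n·d² instead of n²·d, which is what makes long sequences affordable.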

Addressing the Challenge of Attention Interpretability 🔍

One compelling advantage of attention mechanisms is their potential for interpretability. By examining attention weights, researchers and practitioners can gain insights into which input features the model considers important for particular predictions. Attention visualizations have become standard tools for model analysis and debugging.

However, interpreting attention patterns requires caution. High attention weights don’t necessarily indicate causal relationships, and attention distributions can be influenced by technical factors like parameter initialization and optimization dynamics. Rigorous analysis methods are emerging to distinguish meaningful attention patterns from artifacts of the learning process.
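A simple way to start such an inspection is to list, for each query position, the tokens that receive the largest weights. The sketch below uses a small hand-written weight matrix with illustrative values, not the output of a real model, and the helper name `top_attended` is hypothetical. In practice you would take the matrix from a specific layer and head of a trained model.

```python
import numpy as np

def top_attended(weights, tokens, k=2):
    """For each query position, return the k keys with the largest attention weights."""
    report = []
    for i, query in enumerate(tokens):
        top = np.argsort(weights[i])[::-1][:k]  # indices of the k largest weights, descending
        report.append((query, [(tokens[j], round(float(weights[i, j]), 2)) for j in top]))
    return report

tokens = ["the", "cat", "sat", "down"]
# Illustrative weights (each row sums to 1), not taken from a trained model.
weights = np.array([
    [0.05, 0.65, 0.20, 0.10],
    [0.20, 0.30, 0.40, 0.10],
    [0.05, 0.60, 0.15, 0.20],
    [0.10, 0.05, 0.70, 0.15],
])

for query, attended in top_attended(weights, tokens):
    print(f"{query!r} -> {attended}")
```

A listing like this is a starting point for analysis, not an explanation. As noted above, a large weight shows where information was read from, not that the prediction causally depended on it.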

Enhancing Human-Machine Interaction Through Contextual Understanding

The most transformative impact of synthetic attention modeling may be in creating AI systems that interact with humans more naturally and intuitively. Attention mechanisms enable models to maintain context across extended dialogues, recall relevant details from earlier exchanges within their context window, and adapt their responses based on conversational dynamics.

Virtual assistants and chatbots leveraging attention-based architectures can distinguish between multiple topics in complex queries, identify which aspects of user input require clarification, and generate responses that address all relevant points coherently. This represents a substantial improvement over earlier systems that struggled with anything beyond simple command-response patterns.

Personalization at Scale Through Adaptive Attention 💬

Recommendation systems employ attention mechanisms to model user preferences dynamically, considering both long-term interests and recent interactions. By attending to different aspects of user history and item features based on context, these systems deliver more relevant suggestions while adapting to changing preferences over time.
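One common pattern, sketched here under simplifying assumptions, lets the candidate item act as the query over the user's interaction history. The same history is then summarized differently depending on what is being recommended. This is a dot-product variant of the idea. The embeddings are hand-made toy vectors, whereas real systems learn them, and the helper name is hypothetical.

```python
import numpy as np

def softmax(x):
    shifted = x - np.max(x)
    exp = np.exp(shifted)
    return exp / exp.sum()

def user_representation(history, candidate):
    """Summarize the history with weights that depend on the candidate item."""
    scores = history @ candidate / np.sqrt(candidate.shape[0])  # relevance of each past item
    weights = softmax(scores)
    return weights @ history, weights

# Hypothetical 4-dimensional item embeddings: dims ~ [sports, cooking, travel, music].
history = np.array([
    [1.0, 0.0, 0.0, 0.0],   # sports item
    [0.9, 0.1, 0.0, 0.0],   # another sports item
    [0.0, 1.0, 0.0, 0.0],   # cooking item
    [0.0, 0.0, 0.0, 1.0],   # music item
])
running_shoes = np.array([1.0, 0.0, 0.0, 0.0])
cookbook = np.array([0.0, 1.0, 0.0, 0.0])

_, w_shoes = user_representation(history, running_shoes)
_, w_book = user_representation(history, cookbook)
print(w_shoes.argmax(), w_book.argmax())  # 0 2
```

The same four interactions are weighted toward sports when scoring running shoes and toward cooking when scoring a cookbook. This context-dependent summary is what distinguishes attention from a fixed average over the history.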

Content moderation platforms use attention-based models to identify problematic material across text, images, and video simultaneously. These systems can focus on suspicious elements within complex multimedia content, balancing sensitivity and specificity while operating at the massive scale required by modern social platforms.

Cross-Modal Attention: Bridging Different Information Types

Perhaps the most exciting frontier involves cross-modal attention mechanisms that coordinate information across different sensory modalities. Vision-and-language models use cross-attention to align visual features with linguistic descriptions, enabling applications like visual question answering, image-text retrieval, and multimodal content generation.
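Cross-attention differs from self-attention only in where the queries come from. Here, queries come from one modality (text tokens), while keys and values come from another (image patch features). This is a minimal NumPy sketch: both the features and the learned projections are random stand-ins, and the sizes are arbitrary.

```python
import numpy as np

def softmax(x, axis=-1):
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

def cross_attention(text_states, image_patches, W_q, W_k, W_v):
    """Text positions (queries) attend over image patches (keys and values)."""
    Q = text_states @ W_q        # (n_text, d_k)
    K = image_patches @ W_k      # (n_patches, d_k)
    V = image_patches @ W_v      # (n_patches, d_v)
    weights = softmax(Q @ K.T / np.sqrt(K.shape[-1]), axis=-1)  # (n_text, n_patches)
    return weights @ V, weights

rng = np.random.default_rng(5)
n_text, d_text = 7, 32        # e.g. 7 word tokens
n_patches, d_image = 49, 64   # e.g. a 7x7 grid of image patch features
d_k = 16
text_states = rng.normal(size=(n_text, d_text))
image_patches = rng.normal(size=(n_patches, d_image))
# Random stand-ins for learned projections that map both modalities into a shared space.
W_q = rng.normal(size=(d_text, d_k))
W_k = rng.normal(size=(d_image, d_k))
W_v = rng.normal(size=(d_image, d_k))

fused, weights = cross_attention(text_states, image_patches, W_q, W_k, W_v)
print(fused.shape, weights.shape)  # (7, 16) (7, 49)
```

Each row of the `(7, 49)` weight matrix is a distribution over image patches for one word. That is the alignment between linguistic descriptions and visual features described above.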

Audio-visual speech recognition systems leverage cross-modal attention to integrate lip movements with acoustic signals, dramatically improving accuracy in noisy environments. The attention mechanism learns which visual cues correspond to specific phonetic elements, providing complementary information when audio quality degrades.

Robotic systems benefit from cross-modal attention that coordinates visual perception with tactile feedback, proprioceptive information about joint positions, and high-level task specifications. This integrated approach enables more sophisticated manipulation capabilities and adaptive behavior in unstructured environments.

Emerging Architectures and Future Directions 🚀

Research continues to push the boundaries of attention mechanism design. Sparse attention patterns reduce computational requirements while maintaining model capacity, making it feasible to process extremely long sequences efficiently. Techniques like Longformer and BigBird implement specialized attention patterns that balance local and global information access.
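The mask below sketches the kind of pattern these models use. Each position attends to a local window of neighbors. A small set of designated global positions attends to, and is attended by, every other position. This is a simplified illustration with assumed sizes and hypothetical helper names. The real models add further details, such as the random connections in BigBird, and efficient implementations avoid materializing a full `(n, n)` mask at all.

```python
import numpy as np

def sparse_attention_mask(n, window, global_positions):
    """Boolean (n, n) mask: True where query i may attend to key j."""
    idx = np.arange(n)
    mask = np.abs(idx[:, None] - idx[None, :]) <= window  # local sliding window
    mask[global_positions, :] = True   # global positions attend to everything
    mask[:, global_positions] = True   # ...and everything attends to them
    return mask

def masked_softmax(scores, mask):
    """Softmax over allowed keys only; disallowed pairs receive exactly zero weight."""
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(scores)
    return exp / exp.sum(axis=-1, keepdims=True)

# Small example: 10 positions, window of 1, position 0 is global.
small_mask = sparse_attention_mask(10, window=1, global_positions=[0])
scores = np.random.default_rng(6).normal(size=(10, 10))
weights = masked_softmax(scores, small_mask)

# Cost comparison at a longer length: allowed pairs vs. full n * n attention.
n, window = 4096, 64
mask = sparse_attention_mask(n, window, global_positions=[0])
print(int(mask.sum()), n * n)
```

With a window of 64 on a 4,096-token sequence, only about 3% of the pairs that full attention would score are allowed. The allowed count also grows linearly with sequence length rather than quadratically.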

Continuous attention mechanisms extend beyond discrete tokens to operate over continuous representations, particularly valuable for processing signals like audio waveforms or sensor streams directly without explicit segmentation.
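One published formulation of this idea, Martins et al. (2020, "Sparse and Continuous Attention Mechanisms"), replaces the softmax over tokens with a probability density over a continuous domain, such as a Gaussian over time. It then takes the expected value of a signal under that density. The sketch below is a simplified illustration under several assumptions. The signal is a toy sine wave, and the Gaussian's mean `mu` and width `sigma` are fixed by hand rather than predicted by a network. A fine grid is used only to approximate the integral numerically. The helper names are hypothetical.

```python
import numpy as np

def continuous_attention(value_fn, mu, sigma, grid_size=2001):
    """Attend over a continuous signal on t in [0, 1] with a Gaussian density.

    The context is the expectation of value_fn(t) under N(mu, sigma^2),
    truncated to [0, 1]; the grid only serves to approximate the integral.
    """
    t = np.linspace(0.0, 1.0, grid_size)
    density = np.exp(-0.5 * ((t - mu) / sigma) ** 2)
    density /= density.sum()   # normalize on the uniform grid (spacing cancels out)
    return float(np.sum(density * value_fn(t)))

def sensor(t):
    # A toy "sensor stream": one cycle of a sine wave over the interval.
    return np.sin(2 * np.pi * t)

# In a trained model, mu and sigma would be predicted by the network; here they are fixed.
peak = continuous_attention(sensor, mu=0.25, sigma=0.02)
trough = continuous_attention(sensor, mu=0.75, sigma=0.02)
print(round(peak, 2), round(trough, 2))
```

Moving `mu` slides the focus along the signal and shrinking `sigma` sharpens it, without ever cutting the stream into discrete segments.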

Neural architecture search techniques are also being applied to attention-based models. They explore configurations automatically and, in some reported cases, find variants that match or outperform hand-designed alternatives. Such automated search may reveal attention strategies that human designers have not considered.

Biological Plausibility and Neuromorphic Implementations

Researchers are exploring more biologically plausible attention mechanisms that better mirror neural processes observed in neuroscience studies. These approaches might incorporate mechanisms like divisive normalization, lateral inhibition, and oscillatory dynamics that characterize biological attention systems.

Neuromorphic hardware implementations promise to execute attention computations with dramatically improved energy efficiency. By mapping attention operations onto specialized neuromorphic chips that process information through event-driven, asynchronous dynamics, these systems could enable sophisticated AI capabilities in resource-constrained edge devices.

Practical Implementation Considerations for Developers

Implementing attention mechanisms requires understanding both the theoretical foundations and practical engineering considerations. Modern deep learning frameworks provide high-level attention layers that abstract away many implementation details, but effective application still demands careful design choices.

Key hyperparameters include the number of attention heads, the dimensionality of query-key-value representations, and the application of dropout regularization to attention weights. These choices significantly impact both model performance and computational requirements, necessitating systematic experimentation and validation.
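Some of these trade-offs can be estimated before any experiment. The helper below is a back-of-the-envelope sketch under several assumptions. It follows the standard layout from the original Transformer: separate Q, K, V, and output projections of size d_model × d_model with biases, and d_model split evenly across heads. It also assumes 32-bit floats. The function name is hypothetical, and real frameworks may fuse these tensors or avoid storing the full attention matrix.

```python
def attention_budget(d_model, num_heads, seq_len, batch_size=1, bytes_per_value=4):
    """Rough sizing for one standard multi-head attention layer (illustrative)."""
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    # Q, K, V and output projections, each d_model x d_model plus a bias vector.
    params = 4 * (d_model * d_model + d_model)
    # One (seq_len x seq_len) attention matrix per head per sequence, if stored in full.
    attn_matrix_bytes = batch_size * num_heads * seq_len * seq_len * bytes_per_value
    return {"d_head": d_head, "params": params, "attn_matrix_MiB": attn_matrix_bytes / 2**20}

print(attention_budget(d_model=512, num_heads=8, seq_len=1024))
print(attention_budget(d_model=512, num_heads=8, seq_len=4096))
```

Under these assumptions, changing the number of heads leaves the parameter count unchanged, at about 1.05 million for d_model = 512. It does multiply the size of the stored attention maps. Quadrupling the sequence length multiplies that memory by sixteen, from 32 MiB to 512 MiB per sequence in this example.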

Attention mechanism performance varies substantially across domains and tasks. Some applications benefit from dense, full attention over all inputs, while others achieve better results with constrained attention patterns that incorporate domain-specific inductive biases. Successful deployment requires empirical validation with representative datasets and use cases.

The Synergy Between Human Focus and Machine Attention 🤝

The ultimate promise of synthetic attention modeling lies in creating systems that complement rather than replace human cognitive capabilities. By offloading routine attention tasks to AI systems, humans can concentrate their limited attentional resources on higher-level reasoning, creative problem-solving, and ethical judgment that remain beyond machine capabilities.

Collaborative intelligence systems leverage this synergy, using machine attention to filter information, identify patterns, and highlight anomalies, while relying on human attention for final decisions, contextual interpretation, and handling of edge cases. This partnership model represents a more realistic and beneficial vision of AI integration than simplistic automation narratives.

As attention mechanisms continue advancing, they promise to make AI systems more transparent, controllable, and aligned with human values. By understanding what our AI systems attend to and why, we gain crucial insight into their decision-making processes and the ability to guide them toward more beneficial outcomes.

Building More Intelligent Systems Through Attention Mastery

Mastering synthetic attention modeling requires continuous learning and adaptation as the field evolves rapidly. The principles underlying attention mechanisms—selective information processing, dynamic resource allocation, and context-sensitive prioritization—will remain relevant even as specific implementations advance.

For organizations seeking to leverage these technologies, investing in expertise and infrastructure around attention-based architectures can yield real competitive advantages. From improving customer experiences through more responsive interfaces to extracting insights from complex data, attention mechanisms enable capabilities that were impractical with earlier approaches.

The journey toward truly intelligent, context-aware AI systems has only begun. Synthetic attention modeling provides essential building blocks, but realizing the full potential requires ongoing research, thoughtful application, and careful consideration of societal implications. As we continue refining these technologies, we move closer to AI systems that genuinely understand and effectively support human needs and aspirations.

Toni Santos is a digital philosopher and consciousness researcher exploring how artificial intelligence and quantum theory intersect with awareness. Through his work, he investigates how technology can serve as a mirror for self-understanding and evolution. Fascinated by the relationship between perception, code, and consciousness, Toni writes about the frontier where science meets spirituality in the digital age. Blending philosophy, neuroscience, and AI ethics, he seeks to illuminate the human side of technological progress. His work is a tribute to:

- The evolution of awareness through technology
- The integration of science and spiritual inquiry
- The expansion of consciousness in the age of AI

Whether you are intrigued by digital philosophy, mindful technology, or the nature of consciousness, Toni invites you to explore how intelligence, both human and artificial, can awaken awareness.