Artificial intelligence is rapidly transforming our world, but understanding how these systems think remains one of technology’s greatest challenges. Cognitive transparency models offer a pathway to make AI not just powerful, but comprehensible, reliable, and aligned with human values.

As AI systems become increasingly integrated into critical decision-making processes across healthcare, finance, criminal justice, and autonomous vehicles, the black-box nature of many algorithms raises serious concerns. When an AI denies a loan application, recommends a medical treatment, or controls a self-driving car, stakeholders deserve to understand the reasoning behind these consequential decisions. This is where cognitive transparency becomes essential for building trustworthy AI systems.

🧠 What Are Cognitive Transparency Models in AI?

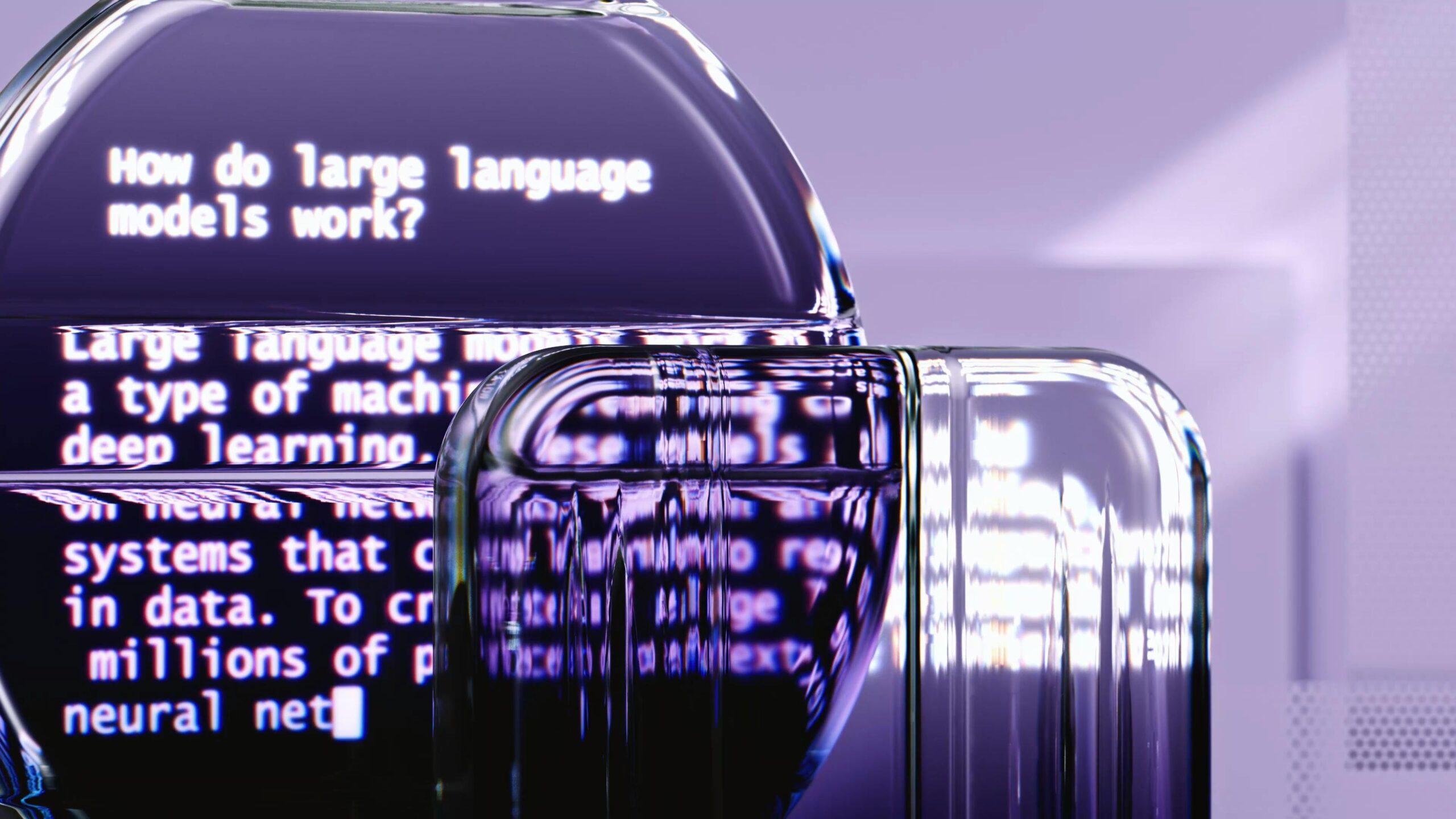

Cognitive transparency models represent frameworks and methodologies designed to make artificial intelligence systems interpretable and explainable. Unlike traditional black-box algorithms where inputs mysteriously transform into outputs, transparent models reveal their internal reasoning processes, decision pathways, and the factors influencing their conclusions.

These models bridge the gap between machine logic and human understanding. They translate complex mathematical operations, neural network activations, and algorithmic processes into formats that stakeholders can comprehend, evaluate, and trust. The goal isn’t simply to expose raw code or data structures, but to provide meaningful insights into how AI systems arrive at their decisions.

The Distinction Between Interpretability and Explainability

While often used interchangeably, interpretability and explainability describe different aspects of transparency. Interpretability refers to the degree to which humans can understand the cause of a decision directly from the model’s structure. Simple linear regression models are inherently interpretable: each coefficient states how much the prediction changes for a one-unit change in its feature, holding the other features fixed.
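
To see why a linear model counts as interpretable, here is a minimal Python sketch that fits a one-feature least-squares line to made-up data (`years` and `salary` are hypothetical values chosen for illustration). No separate explanation tool is needed: the fitted slope itself says how much the prediction moves per unit of input.

```python
def fit_simple_linear(xs, ys):
    """Ordinary least squares for y = intercept + slope * x (closed form, one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical toy data: years of experience vs. salary (in thousands).
years = [1, 2, 3, 4, 5]
salary = [42, 46, 50, 54, 58]

intercept, slope = fit_simple_linear(years, salary)
# The model's structure is its explanation: each extra year adds `slope` to the prediction.
print(f"salary ≈ {intercept:.1f} + {slope:.1f} * years")  # salary ≈ 38.0 + 4.0 * years
```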

Explainability, conversely, involves providing after-the-fact justifications for model decisions. Deep neural networks may lack inherent interpretability, but explanation methods can highlight which features influenced particular predictions. Both approaches contribute to cognitive transparency, offering different lenses through which to understand AI behavior.

⚙️ Core Components of Transparent AI Systems

Building genuinely transparent AI requires attention to multiple architectural and operational elements. Together, these components produce systems that balance performance with understandability.

Model Architecture Selection

The foundation of transparency begins with choosing appropriate model architectures. Decision trees, rule-based systems, and linear models offer inherent interpretability, making their logic accessible without additional explanation tools. While these simpler models may sacrifice some predictive power compared to deep learning approaches, they provide immediate transparency benefits for applications where understanding is paramount.
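
A decision tree is transparent because the path to each prediction is a readable chain of tests. The Python sketch below walks a small hand-written tree and records every test it applies; the loan-screening features, thresholds, and labels are hypothetical and not drawn from any real lender.

```python
# Hypothetical loan-screening tree. Internal nodes are tuples
# (feature, threshold, subtree_if_below, subtree_if_at_or_above); leaves are label strings.
TREE = (
    "debt_to_income", 0.40,
    ("credit_history_years", 2, "refer to underwriter", "approve"),
    "decline",
)


def predict_with_path(tree, applicant):
    """Return the predicted label and the list of tests applied on the way to it."""
    path = []
    node = tree
    while not isinstance(node, str):  # descend until we reach a leaf label
        feature, threshold, below, at_or_above = node
        value = applicant[feature]
        if value < threshold:
            path.append(f"{feature} = {value} < {threshold}")
            node = below
        else:
            path.append(f"{feature} = {value} >= {threshold}")
            node = at_or_above
    return node, path


label, path = predict_with_path(TREE, {"debt_to_income": 0.25, "credit_history_years": 5})
print(label)  # approve
print(" AND ".join(path))
```

The returned path doubles as the explanation, so the reasoning shown to a reviewer is exactly the reasoning the model used.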

When complex models like deep neural networks are necessary, architectural choices still matter. Attention mechanisms in transformer models, for instance, expose weights indicating which input elements the model attended to when generating outputs, although researchers debate how faithfully attention weights explain a model’s final decision. Modular neural architectures can isolate different reasoning components, making each subsystem easier to analyze independently.

Feature Engineering and Selection

Transparent AI systems benefit greatly from thoughtful feature engineering. Features that correspond to concepts humans already understand make explanations easier to follow. Medical diagnosis systems that rely on clinically meaningful biomarkers are typically easier to interpret than those operating directly on raw pixel data from medical images.

Feature importance techniques reveal which variables most strongly influence model predictions. Methods like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) quantify individual feature contributions, helping stakeholders understand not just what the model decided, but why those specific factors mattered.
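
SHAP builds on Shapley values from cooperative game theory: a feature’s contribution is its average marginal effect over all subsets of the other features. The Python sketch below computes them exactly by brute force for a hypothetical three-feature model, replacing “absent” features with a single baseline value (one common way to define the value function). It is not the `shap` library, which uses faster estimators; exact enumeration costs 2^n model calls and only suits a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction of `model` at point `x`.

    Features outside a coalition are set to their `baseline` value.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalitions of the other features have 0..n-1 members
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z):
    # Hypothetical model: a main effect on feature 0 plus an interaction of features 1 and 2.
    return 2.0 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print([round(p, 6) for p in phi])  # [2.0, 3.0, 3.0]
```

The contributions add up to the gap between the prediction and the baseline prediction (here 8 − 0), and the interaction term is split evenly between the two features that share it.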

Decision Pathway Visualization

Cognitive transparency extends beyond static explanations to dynamic visualization of decision pathways. Interactive tools that trace how input data flows through model layers, showing activations and transformations at each stage, demystify complex processing pipelines.

These visualizations serve multiple audiences. Technical teams use them for debugging and model improvement. Domain experts verify that model reasoning aligns with professional knowledge. End users gain confidence through seeing logical decision processes rather than inexplicable outputs.

🛡️ Why Cognitive Transparency Matters for AI Safety

AI transparency has direct safety implications. As autonomous systems gain authority over consequential decisions, their opacity creates serious risks. Transparent models enable proactive identification of failures, biases, and misalignments before they cause harm.

Detecting and Mitigating Bias

Bias in AI systems perpetuates and amplifies societal inequities when left unchecked. Facial recognition systems that perform poorly on darker skin tones, hiring algorithms that discriminate against women, and predictive policing tools that target minority communities demonstrate the real-world consequences of opaque AI.

Cognitive transparency models expose these biases by revealing which features drive predictions and how different demographic groups receive disparate treatment. When engineers can see that a hiring algorithm overweights traditionally male-dominated job titles or undervalues career gaps common among caregivers, they can implement corrections before deployment.
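
A common first check, before digging into feature-level explanations, is to compare outcome rates across groups. The Python sketch below computes selection rates and the ratio of the lowest to the highest rate on hypothetical screening outcomes; the 0.8 cutoff follows the “four-fifths” rule of thumb from US employment-selection guidelines. A low ratio flags a disparity but does not say which features cause it, which is where the explanation methods in this article come in.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs. Returns {group: selection rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, chosen in decisions:
        totals[group] += 1
        selected[group] += int(chosen)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


# Hypothetical outcomes from a hiring model: 100 applicants per group.
outcomes = ([("group_a", True)] * 30 + [("group_a", False)] * 70 +
            [("group_b", True)] * 18 + [("group_b", False)] * 82)

rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
print(rates)                  # {'group_a': 0.3, 'group_b': 0.18}
print(f"ratio = {ratio:.2f}")  # ratio = 0.60
if ratio < 0.8:
    print("Below the four-fifths heuristic: inspect which features drive the gap.")
```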

Enabling Meaningful Human Oversight

Human-in-the-loop systems require transparency to function effectively. Medical professionals using AI diagnostic tools need to understand recommendation rationale to integrate AI insights with their clinical judgment appropriately. Financial advisors require transparency to explain investment algorithm recommendations to clients.

Without cognitive transparency, human oversight becomes superficial rubber-stamping rather than meaningful supervision. Transparent models empower humans to identify situations where AI reasoning diverges from appropriate standards, enabling intervention when necessary.

Facilitating Regulatory Compliance

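Regulatory frameworks increasingly address AI explainability. The European Union’s General Data Protection Regulation (GDPR) gives individuals rights concerning solely automated decisions that significantly affect them, including access to meaningful information about the logic involved; how far this amounts to a full “right to explanation” is still debated. In the United States, credit regulations require lenders to tell applicants the principal reasons for adverse decisions. Medical device regulations demand clear documentation of AI decision processes.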

Cognitive transparency models aren’t just ethical imperatives but practical necessities for regulatory compliance. Organizations deploying opaque AI systems face legal exposure and potential market exclusion as transparency requirements expand globally.

🔍 Technical Approaches to Building Transparent AI

Multiple technical methodologies enable cognitive transparency, each with particular strengths for different applications and use cases. Understanding these approaches helps organizations select appropriate transparency strategies.

Intrinsically Interpretable Models

The most straightforward path to transparency involves using models that are inherently understandable. Generalized Additive Models (GAMs) decompose predictions into individual feature contributions that can be visualized as simple curves. Rule-based systems express logic in if-then statements that mirror human reasoning patterns.
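
A GAM predicts with an intercept plus one shape function per feature, so each feature’s effect can be read off (or plotted) on its own. The Python sketch below uses piecewise-constant shape functions stored as per-bin lookup tables, roughly the form that Explainable Boosting Machines learn; the features, bin edges, and scores are hypothetical and hand-set rather than learned.

```python
import bisect


class ShapeFunction:
    """Piecewise-constant curve: `edges` split the feature range into bins,
    and `scores` holds one contribution per bin (len(scores) == len(edges) + 1)."""

    def __init__(self, edges, scores):
        if len(scores) != len(edges) + 1:
            raise ValueError("need exactly one score per bin")
        self.edges = edges
        self.scores = scores

    def __call__(self, value):
        # bisect_right finds the bin index; a value equal to an edge falls in the upper bin.
        return self.scores[bisect.bisect_right(self.edges, value)]


# Hypothetical risk model on the log-odds scale; all numbers are illustrative.
INTERCEPT = -2.0
SHAPES = {
    "age": ShapeFunction(edges=[40, 60], scores=[-0.3, 0.1, 0.6]),
    "systolic_bp": ShapeFunction(edges=[120, 140], scores=[-0.2, 0.0, 0.5]),
}


def predict_and_explain(features):
    """Return the additive score and each feature's contribution to it."""
    contributions = {name: shape(features[name]) for name, shape in SHAPES.items()}
    return INTERCEPT + sum(contributions.values()), contributions


score, parts = predict_and_explain({"age": 65, "systolic_bp": 130})
print(parts)  # {'age': 0.6, 'systolic_bp': 0.0}
```

Because the model is a sum, the explanation is exact: the per-feature contributions plus the intercept are the prediction, with no approximation step.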

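Recent innovations have expanded interpretable model capabilities while maintaining transparency. Neural Additive Models combine neural networks’ flexibility with GAMs’ interpretability by learning each feature’s shape function with its own small neural network. Explainable Boosting Machines, GAMs trained with boosting that can also include a small number of pairwise interaction terms, often deliver predictive performance competitive with black-box models on tabular data while remaining fully inspectable.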

Post-Hoc Explanation Methods

When application requirements demand complex black-box models, post-hoc explanation techniques provide transparency overlays. SHAP values decompose any model’s predictions into additive feature contributions, offering consistent explanations across model types. LIME generates local approximations of complex models using simple, interpretable surrogates.
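
The core LIME idea fits in a few lines: perturb the input, query the black box, weight each sample by its closeness to the original point, and fit a weighted linear model. The Python sketch below is a simplified LIME-style procedure for continuous features, not the `lime` package’s implementation (which, among other things, handles sampling and feature representations differently). The black-box function, noise scale, and kernel width are assumptions chosen for illustration.

```python
import math
import random


def solve_linear_system(a, b):
    """Gaussian elimination with partial pivoting for a small square system a @ w = b."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def local_surrogate(black_box, x, num_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model to `black_box` around `x`.

    Returns [intercept, weight_1, ..., weight_d]; features are centred on `x`,
    so the intercept approximates the prediction at `x`.
    """
    rng = random.Random(seed)
    dim = len(x) + 1
    xtwx = [[0.0] * dim for _ in range(dim)]  # accumulates X^T W X
    xtwy = [0.0] * dim                       # accumulates X^T W y
    for _ in range(num_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]
        offsets = [zi - xi for zi, xi in zip(z, x)]
        weight = math.exp(-sum(o * o for o in offsets) / kernel_width ** 2)
        row = [1.0] + offsets
        y = black_box(z)
        for i in range(dim):
            xtwy[i] += weight * row[i] * y
            for j in range(dim):
                xtwx[i][j] += weight * row[i] * row[j]
    return solve_linear_system(xtwx, xtwy)  # weighted least-squares normal equations


def black_box_model(z):
    # Stand-in for an opaque model: nonlinear in the first feature.
    return z[0] ** 2 + 3.0 * z[1]


coefs = local_surrogate(black_box_model, [1.0, 2.0])
print("intercept %.2f, local weights %.2f, %.2f" % tuple(coefs))
```

Near the point (1, 2) the true local slopes of this toy model are 2 and 3, so the surrogate’s weights should land close to those values: a simple, readable summary of behavior that is nonlinear globally.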

Counterfactual explanations answer “what-if” questions by identifying minimal input changes that would alter a model’s decision. If a loan application is denied, counterfactual methods might reveal that $5,000 more in income or two more years of employment history would have resulted in approval, giving the applicant actionable information.
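
For a small model, the loan example above can be reproduced with a brute-force search. The Python sketch below increases one feature at a time, in fixed steps, until a denial flips to an approval; the scoring rule, step sizes, and applicant are hypothetical. Published counterfactual methods typically optimize a distance-penalized objective over many features at once, so treat this as an illustration of the idea rather than a production technique.

```python
# Hypothetical scoring rule; the weights and threshold are illustrative only.
def approve(applicant):
    score = applicant["income"] / 10_000 + 0.25 * applicant["years_employed"]
    return score >= 6.0


def single_feature_counterfactuals(model, applicant, steps, max_steps=50):
    """For each feature, find the smallest increase (a multiple of its step)
    that turns a denial into an approval, holding the other features fixed."""
    results = {}
    for feature, step in steps.items():
        for k in range(1, max_steps + 1):
            candidate = dict(applicant)
            candidate[feature] = applicant[feature] + k * step
            if model(candidate):
                results[feature] = k * step
                break
    return results


applicant = {"income": 45_000, "years_employed": 4}
print(approve(applicant))  # False
changes = single_feature_counterfactuals(
    approve, applicant, {"income": 1_000, "years_employed": 1}
)
print(changes)  # {'income': 5000, 'years_employed': 2}
```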

Attention and Saliency Mapping

For models processing images, text, or other high-dimensional data, attention mechanisms and saliency maps reveal which input regions influenced outputs. Grad-CAM highlights the image regions that most influenced a convolutional neural network’s score for a particular class. Attention weights in transformer models show how strongly each token attends to the others, offering a rough view of which words the model prioritized.
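
Attention weights come from a softmax over scaled dot products between a query vector and each token’s key vector. The Python sketch below computes them for a single query with made-up 4-dimensional vectors; in a real transformer these vectors come from learned projections, there are many heads and layers, and a single head’s weights are only a partial and sometimes misleading view of why the model decided what it did.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over a sequence of keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)


# Hypothetical toy vectors; a trained model would produce these from learned weights.
tokens = ["the", "loan", "was", "denied"]
keys = [
    [0.1, 0.0, 0.1, 0.0],
    [0.9, 0.2, 0.0, 0.1],
    [0.0, 0.1, 0.1, 0.0],
    [0.8, 0.9, 0.1, 0.3],
]
query = [1.0, 1.0, 0.0, 0.0]

weights = attention_weights(query, keys)
for token, w in sorted(zip(tokens, weights), key=lambda pair: -pair[1]):
    print(f"{token:>7}: {w:.2f}")
```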

These techniques provide intuitive transparency for perceptual tasks. Medical professionals can check that a pneumonia detection system actually responds to lung tissue rather than to spurious cues such as medical equipment visible in the image. Content moderators can confirm that toxicity classifiers respond to genuinely problematic language rather than to innocent keywords.

💼 Real-World Applications of Cognitive Transparency

Across industries, cognitive transparency models are transforming how organizations deploy AI, building trust and unlocking value in previously problematic domains.

Healthcare Diagnostics and Treatment Planning

Medical AI systems exemplify transparency’s critical importance. Clinicians require clear reasoning to integrate AI recommendations into patient care responsibly. Transparent diagnostic models that highlight relevant symptoms, lab values, and imaging features enable doctors to verify AI logic against medical knowledge.

Treatment recommendation systems with cognitive transparency explain why specific therapies are suggested based on patient characteristics, similar case outcomes, and clinical guidelines. This transparency supports informed shared decision-making between clinicians and patients, which can improve both care quality and patient satisfaction.

Financial Services and Credit Assessment

Financial institutions face stringent transparency requirements for credit decisions. Traditional credit scoring relied on interpretable statistical models specifically to meet these needs. As machine learning promises improved risk assessment, maintaining transparency becomes challenging but essential.

Modern transparent AI systems in finance combine predictive power with explainability. They identify specific factors contributing to credit decisions, enabling loan officers to discuss decisions with applicants. This transparency also helps institutions demonstrate fair lending compliance and identify potential discriminatory patterns proactively.
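
In credit, explanations often take the form of reason codes: the few factors that lowered an applicant’s score the most. The Python sketch below derives them from a hypothetical points-based scorecard by comparing each feature with a reference profile. The feature names, point weights, and reference values are invented for illustration, and measuring against a reference profile is only one of several possible conventions.

```python
# Hypothetical scorecard: points per unit of each feature.
WEIGHTS = {
    "utilization_pct": -0.8,
    "late_payments_12m": -25.0,
    "credit_history_years": 4.0,
    "recent_inquiries": -6.0,
}
# Hypothetical reference profile, e.g. a typical approved applicant.
REFERENCE = {
    "utilization_pct": 30,
    "late_payments_12m": 0,
    "credit_history_years": 8,
    "recent_inquiries": 1,
}


def reason_codes(applicant, top_k=2):
    """Rank features by how many points they cost relative to the reference profile."""
    deltas = {
        name: w * (applicant[name] - REFERENCE[name]) for name, w in WEIGHTS.items()
    }
    negatives = [(name, d) for name, d in deltas.items() if d < 0]
    negatives.sort(key=lambda pair: pair[1])  # most costly first
    return negatives[:top_k]


applicant = {"utilization_pct": 85, "late_payments_12m": 1,
             "credit_history_years": 6, "recent_inquiries": 2}
for name, points in reason_codes(applicant, top_k=3):
    print(f"{name}: {points:+.1f} points vs. reference")
```

Because a scorecard is additive, these reasons are exact statements about the model rather than approximations, which is one reason linear scorecards remain popular where explanations are required.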

Autonomous Vehicles and Robotics

Self-driving vehicles make split-second decisions with life-or-death consequences. While real-time decision transparency for passengers may be impractical, post-incident analysis requires detailed understanding of vehicle reasoning. Transparent models enable engineers to reconstruct decision pathways following accidents, identifying whether vehicles responded appropriately to sensor inputs.
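
Post-incident reconstruction depends on a record of what the system perceived, which options it weighed, and what it chose. The Python sketch below appends one JSON line per decision; the `DecisionRecord` fields, action names, and scores are hypothetical, and taking the highest score stands in for a real planner. A log like this captures a decision; it becomes an explanation only if the scores themselves come from an interpretable model.

```python
import io
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class DecisionRecord:
    """One planning step, captured so engineers can replay it after an incident."""
    timestamp: float
    sensor_summary: dict      # hypothetical schema, e.g. {"pedestrian_distance_m": 12.0}
    candidate_scores: dict    # action name -> planner score
    chosen_action: str
    rationale: list = field(default_factory=list)


def choose_and_log(sensor_summary, candidate_scores, log_file):
    """Pick the highest-scoring action and append a JSON line describing the decision."""
    chosen = max(candidate_scores, key=candidate_scores.get)
    record = DecisionRecord(
        timestamp=time.time(),
        sensor_summary=sensor_summary,
        candidate_scores=candidate_scores,
        chosen_action=chosen,
        rationale=[f"{chosen} scored {candidate_scores[chosen]:.2f}, "
                   f"highest of {len(candidate_scores)} options"],
    )
    log_file.write(json.dumps(asdict(record)) + "\n")  # one JSON object per line
    return chosen


log_buffer = io.StringIO()  # a real system would write to durable storage
action = choose_and_log(
    {"pedestrian_distance_m": 12.0, "speed_mps": 9.5},
    {"brake": 0.92, "maintain_speed": 0.31, "swerve_left": 0.18},
    log_buffer,
)
print(action)  # brake
print(log_buffer.getvalue())
```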

This transparency accelerates improvement cycles, helps establish liability in accidents, and builds the public trust needed for autonomous vehicle adoption. Regulators increasingly expect explainability from autonomous systems and may treat it as a prerequisite for deployment approval.

🚀 The Future of Transparent AI: Emerging Trends

Cognitive transparency research continues advancing rapidly, with several promising directions poised to enhance AI interpretability and trustworthiness further.

Neural-Symbolic Integration

Hybrid approaches combining neural networks’ pattern recognition capabilities with symbolic AI’s logical reasoning offer exciting transparency potential. These systems can learn from data like neural networks while expressing knowledge in human-readable symbolic formats. The resulting models leverage deep learning’s power while maintaining interpretability through explicit logical rules.

Interactive Explanation Interfaces

Rather than static explanations, next-generation transparent AI systems provide interactive dialogue where users can probe model reasoning through natural language questions. These conversational interfaces let stakeholders explore different aspects of decisions based on their specific concerns and expertise levels.

Transparency Standards and Certification

Industry coalitions and standards bodies are developing formal transparency requirements and certification processes. These frameworks establish consistent expectations for AI explainability across sectors, enabling organizations to demonstrate transparency credentials and helping procurement teams evaluate vendor solutions.

⚖️ Balancing Transparency with Other AI Objectives

While cognitive transparency delivers substantial benefits, it exists in tension with other important considerations. Navigating these tradeoffs requires nuanced judgment based on application context.

The Accuracy-Interpretability Tradeoff

More interpretable models sometimes sacrifice predictive accuracy compared to black-box alternatives. For low-stakes applications, this tradeoff favors transparency. However, when accuracy directly impacts safety or efficacy, accepting some opacity for substantially better performance may be appropriate, supplemented with post-hoc explanation methods.

Intellectual Property and Competitive Advantage

Excessive transparency can expose proprietary algorithms and training data, undermining competitive positions. Organizations must balance stakeholders’ transparency needs against legitimate intellectual property protection. Strategic transparency, which reveals enough reasoning to earn trust without disclosing sensitive implementation details, offers a middle ground.

Adversarial Exploitation Risks

Complete transparency can also enable adversarial attacks by revealing exactly how to manipulate model inputs to obtain desired outputs. Spam filters that fully explain their logic become easier to evade. Security-critical applications require carefully calibrated transparency that satisfies accountability needs without providing attack blueprints.

🌐 Building a Culture of Transparent AI Development

Technical solutions alone cannot achieve genuine AI transparency. Organizational culture, development practices, and stakeholder engagement prove equally essential for creating truly trustworthy systems.

Cross-functional teams including domain experts, ethicists, and end-user representatives should participate throughout AI development lifecycles. Their diverse perspectives identify transparency requirements that purely technical teams might overlook. Documentation practices should emphasize not just what models do, but why design choices were made and what limitations exist.

Organizations committed to transparent AI invest in education, ensuring technical teams understand explanation techniques and stakeholders can meaningfully interpret explanations provided. This bidirectional knowledge transfer creates shared understanding necessary for transparency to generate actual trust rather than mere compliance theater.

🎯 Practical Steps Toward Implementing Cognitive Transparency

For organizations seeking to enhance AI transparency, concrete implementation pathways transform aspirations into reality. Begin by auditing existing AI systems, identifying which decisions most critically require explanation based on stakes, regulatory requirements, and stakeholder needs.

Prioritize transparency investments where they deliver maximum value. Customer-facing applications, high-stakes decisions, and legally regulated domains typically justify significant transparency efforts. Internal operational systems with limited consequences may warrant lighter-touch approaches.

Establish transparency metrics and evaluation frameworks. How do different stakeholder groups perceive explanation quality? Can domain experts verify that model reasoning aligns with accepted principles? Do end users report increased trust and appropriate reliance on AI recommendations? Systematic assessment ensures transparency efforts achieve intended objectives.
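
One quantitative metric that complements these stakeholder questions is fidelity: how often an interpretable surrogate reproduces the decisions of the model it claims to explain. The Python sketch below estimates it by sampling; the black-box classifier, the one-rule surrogate, and the input ranges are all hypothetical. High fidelity shows an explanation is faithful to the model, not that people find it useful, so it does not replace user-facing evaluation.

```python
import random


def fidelity(black_box, surrogate, samples):
    """Fraction of inputs on which the surrogate reproduces the black-box decision."""
    agree = sum(black_box(x) == surrogate(x) for x in samples)
    return agree / len(samples)


# Hypothetical black-box classifier and a one-rule surrogate meant to explain it.
def black_box(x):
    return x[0] + 0.3 * x[1] ** 2 > 1.0


def surrogate(x):
    return x[0] > 0.8


rng = random.Random(42)
samples = [(rng.uniform(0, 2), rng.uniform(-1, 1)) for _ in range(1000)]
score = fidelity(black_box, surrogate, samples)
print(f"surrogate agrees with the model on {score:.0%} of samples")
```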

Partner with researchers and solution providers specializing in explainable AI. The field evolves rapidly, and external expertise accelerates adoption of cutting-edge transparency techniques. Open-source explanation libraries and commercial transparency platforms offer accessible starting points for organizations beginning their transparency journeys.

🔮 The Transformative Potential of Truly Transparent AI

Cognitive transparency models represent far more than technical enhancements: they embody a fundamental reimagining of the relationship between humans and artificial intelligence. By making AI systems comprehensible, we transform them from inscrutable oracles into collaborative partners whose reasoning we can evaluate, trust, and appropriately rely upon.

This transparency can unlock AI’s potential across domains where opacity currently limits adoption. When doctors can trust AI diagnostics, patients can receive better care. When financial institutions explain credit decisions fairly, economic opportunity expands. When autonomous systems operate transparently, public acceptance can accelerate the deployment of beneficial technology.

The path forward requires sustained commitment from researchers, developers, policymakers, and organizations deploying AI systems. Technical innovation must continue advancing transparency capabilities while regulatory frameworks establish appropriate requirements and incentives. Most fundamentally, we must collectively embrace transparency as a core value in AI development, not an afterthought or obstacle to overcome.

As artificial intelligence increasingly shapes critical aspects of modern life, cognitive transparency models offer the key to ensuring these powerful systems remain comprehensible, accountable, and aligned with human values. The future of AI is not just smart; it is transparent, trustworthy, and worthy of the confidence we place in systems shaping our world.

Toni Santos is a digital philosopher and consciousness researcher exploring how artificial intelligence and quantum theory intersect with awareness. Through his work, he investigates how technology can serve as a mirror for self-understanding and evolution. Fascinated by the relationship between perception, code, and consciousness, Toni writes about the frontier where science meets spirituality in the digital age. Blending philosophy, neuroscience, and AI ethics, he seeks to illuminate the human side of technological progress. His work is a tribute to the evolution of awareness through technology, the integration of science and spiritual inquiry, and the expansion of consciousness in the age of AI. Whether you are intrigued by digital philosophy, mindful technology, or the nature of consciousness, Toni invites you to explore how intelligence, both human and artificial, can awaken awareness.